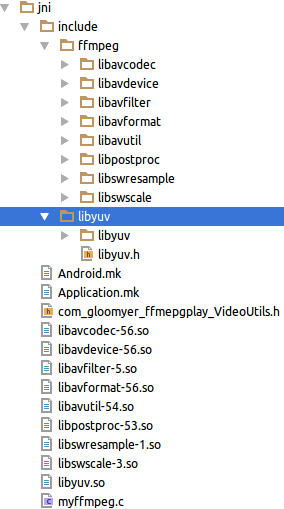

```c
#include "com_gloomyer_ffmepgplay_VideoUtils.h"

#include "include/ffmpeg/libavcodec/avcodec.h"
#include "include/ffmpeg/libavformat/avformat.h"
#include "include/ffmpeg/libswscale/swscale.h"
#include "include/libyuv/libyuv.h"

#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

#include <android/log.h>
#include <android/native_window.h>
#include <android/native_window_jni.h>

/* No trailing semicolon, so the macros behave like ordinary function calls. */
#define LOGI(FORMAT, ...) __android_log_print(ANDROID_LOG_INFO, "TAG", FORMAT, ##__VA_ARGS__)
#define LOGE(FORMAT, ...) __android_log_print(ANDROID_LOG_ERROR, "TAG", FORMAT, ##__VA_ARGS__)

/*
 * Decodes the video stream of input_jstr and draws every frame onto the Java Surface.
 * Uses the legacy FFmpeg API (av_register_all, AVStream.codec, avcodec_decode_video2,
 * avpicture_fill, av_free_packet), which newer FFmpeg releases deprecate or remove.
 */
JNIEXPORT void JNICALL Java_com_gloomyer_ffmepgplay_VideoUtils_render
        (JNIEnv *env, jclass jcls, jstring input_jstr, jobject surface_jobj) {
    AVFormatContext *pFormatCtx = NULL;
    AVCodecContext *pCodecCtx = NULL;
    AVCodec *pCodec = NULL;
    AVPacket *pPacket = NULL;        // compressed (encoded) data
    AVFrame *pYuvFrame = NULL;       // decoded pixel data
    AVFrame *pRgbFrame = NULL;       // RGBA view of the window buffer
    ANativeWindow *pNativeWindow = NULL;
    ANativeWindow_Buffer outBuffer;  // buffer used while drawing
    int video_index = -1, codec_opened = 0;
    int len, got_frame = 0, framecount = 0;

    const char *input_cstr = (*env)->GetStringUTFChars(env, input_jstr, NULL);
    if (input_cstr == NULL) {
        return;  // GetStringUTFChars failed (out of memory)
    }
    LOGI("%s", "Entering native code");

    // 1. Register all formats and codecs
    av_register_all();
    pFormatCtx = avformat_alloc_context();

    // 2. Open the input video file (on failure FFmpeg frees pFormatCtx and sets it to NULL)
    if (avformat_open_input(&pFormatCtx, input_cstr, NULL, NULL) != 0) {
        LOGE("Failed to open input file: %s", input_cstr);
        goto end;
    }

    // 3. Read the stream information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Failed to read stream information");
        goto end;
    }

    // Locate the first video stream
    for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            video_index = (int) i;
            break;
        }
    }
    if (video_index < 0) {
        LOGE("%s", "No video stream found");
        goto end;
    }

    // 4. Find a decoder for the video stream
    pCodecCtx = pFormatCtx->streams[video_index]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGE("%s", "No decoder available for this stream");
        goto end;
    }

    // 5. Open the decoder
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("%s", "Failed to open the decoder");
        goto end;
    }
    codec_opened = 1;

    pPacket = (AVPacket *) av_malloc(sizeof(AVPacket));
    pYuvFrame = av_frame_alloc();
    pRgbFrame = av_frame_alloc();
    if (pPacket == NULL || pYuvFrame == NULL || pRgbFrame == NULL) {
        LOGE("%s", "Out of memory");
        goto end;
    }
    av_init_packet(pPacket);

    // Native window backing the Java Surface
    pNativeWindow = ANativeWindow_fromSurface(env, surface_jobj);
    if (pNativeWindow == NULL) {
        LOGE("%s", "Failed to get a native window from the Surface");
        goto end;
    }
    // Buffer properties (width, height, pixel format) only need to be set once
    ANativeWindow_setBuffersGeometry(pNativeWindow, pCodecCtx->width, pCodecCtx->height,
                                     WINDOW_FORMAT_RGBA_8888);

    // 6. Read the compressed video data (AVPacket) frame by frame
    LOGI("%s", "Decoding frame by frame");
    while (av_read_frame(pFormatCtx, pPacket) >= 0) {
        // Packets from audio or subtitle streams must not reach the video decoder
        if (pPacket->stream_index == video_index) {
            // Decode AVPacket -> AVFrame
            len = avcodec_decode_video2(pCodecCtx, pYuvFrame, &got_frame, pPacket);
            if (len < 0) {
                LOGE("Decode error: %d", len);
            } else if (got_frame && ANativeWindow_lock(pNativeWindow, &outBuffer, NULL) == 0) {
                LOGI("Decoded frame %d", framecount++);
                // pRgbFrame shares its memory with outBuffer.bits
                avpicture_fill((AVPicture *) pRgbFrame, outBuffer.bits, AV_PIX_FMT_RGBA,
                               pCodecCtx->width, pCodecCtx->height);
                // The window's row stride (in pixels) may be wider than the frame
                pRgbFrame->linesize[0] = outBuffer.stride * 4;
                // YUV420P -> RGBA. libyuv "ABGR" is R,G,B,A in memory, matching RGBA_8888.
                // Assumes the decoder outputs planar YUV 4:2:0 (I420).
                I420ToABGR(pYuvFrame->data[0], pYuvFrame->linesize[0],
                           pYuvFrame->data[1], pYuvFrame->linesize[1],
                           pYuvFrame->data[2], pYuvFrame->linesize[2],
                           pRgbFrame->data[0], pRgbFrame->linesize[0],
                           pCodecCtx->width, pCodecCtx->height);
                ANativeWindow_unlockAndPost(pNativeWindow);
                // Fixed ~16 ms delay (about 60 fps); ignores the stream's real frame rate
                usleep(1000 * 16);
            }
        }
        av_free_packet(pPacket);
    }

end:
    av_frame_free(&pYuvFrame);
    av_frame_free(&pRgbFrame);
    av_free(pPacket);
    if (codec_opened) {
        avcodec_close(pCodecCtx);
    }
    // Closes the input opened by avformat_open_input and frees the context
    avformat_close_input(&pFormatCtx);
    if (pNativeWindow != NULL) {
        ANativeWindow_release(pNativeWindow);
    }
    (*env)->ReleaseStringUTFChars(env, input_jstr, input_cstr);
}
```
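For reference, the per-pixel math behind an I420-to-RGBA conversion such as libyuv's `I420ToABGR` can be sketched in plain C. This is a minimal scalar sketch, not libyuv's implementation: the function name `i420_to_rgba_ref` is hypothetical, it assumes BT.601 limited-range coefficients (libyuv uses fixed-point arithmetic, so its output may differ by a rounding step), and `dst_stride` is in bytes, so for an `ANativeWindow_Buffer` you would pass `stride * 4` rather than `width * 4`. The coefficients also show why the common shortcut of passing the U and V planes swapped to `I420ToARGB` only approximates RGBA output: the V-to-R coefficient (1.596) and the U-to-B coefficient (2.018) differ, so swapping the chroma planes does not exactly swap red and blue.

```c
#include <stddef.h>
#include <stdint.h>

/* Clamp a float to [0, 255] and round to the nearest integer. */
uint8_t clamp_u8(float x) {
    if (x <= 0.0f) return 0;
    if (x >= 255.0f) return 255;
    return (uint8_t)(x + 0.5f);
}

/*
 * Hypothetical scalar reference for I420 -> RGBA (byte order R,G,B,A).
 * Assumes BT.601 limited-range coefficients.
 * dst_stride is in BYTES (for an ANativeWindow_Buffer: stride * 4);
 * bytes between width * 4 and dst_stride in each row are left untouched.
 */
void i420_to_rgba_ref(const uint8_t *y_plane, int y_stride,
                      const uint8_t *u_plane, int u_stride,
                      const uint8_t *v_plane, int v_stride,
                      uint8_t *dst, int dst_stride,
                      int width, int height) {
    for (int row = 0; row < height; row++) {
        uint8_t *out = dst + (size_t)row * dst_stride;
        for (int col = 0; col < width; col++) {
            /* Each U/V sample covers a 2x2 block of luma samples (4:2:0). */
            float yy = 1.164f * (float)(y_plane[row * y_stride + col] - 16);
            float u = (float)(u_plane[(row / 2) * u_stride + col / 2] - 128);
            float v = (float)(v_plane[(row / 2) * v_stride + col / 2] - 128);
            out[4 * col + 0] = clamp_u8(yy + 1.596f * v);              /* R */
            out[4 * col + 1] = clamp_u8(yy - 0.813f * v - 0.391f * u);  /* G */
            out[4 * col + 2] = clamp_u8(yy + 2.018f * u);              /* B */
            out[4 * col + 3] = 255;                                    /* A */
        }
    }
}
```

Passing a row stride larger than `width * 4` leaves the padding bytes at the end of each row untouched, which is exactly the layout a locked window buffer may have.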